Table of Contents

- Neuralnetlib

- 📝 Description

- 📦 Features

- ⚙️ Installation

- 💡 How to use

- Basic usage

- Advanced usage

- 🚀 Quick training examples (more here)

- 📜 Some outputs and easy usages

- Here is the decision boundary on a Binary Classification (breast cancer dataset):

- Here is an example of a model trained on MNIST using the library:

- Here is an example of a loaded model used with Tkinter:

- Here, I replaced Keras/Tensorflow with this library for my Handigits project...

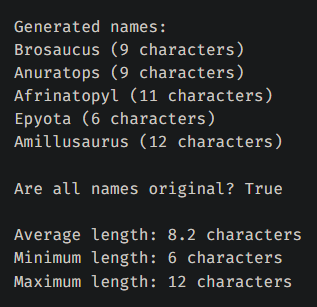

- Here are some dinosaur names generated by a simple RNN trained on a list of existing dinosaur names.

- Here are some MNIST generated images using a cGAN.

- ✏️ Edit the library

- 🎯 TODO

- 🐞 Known issues

- ✍️ Authors

Neuralnetlib

📝 Description

This is a handmade machine learning and deep learning framework library, written in Python, using NumPy as its only external dependency.

I made it to challenge myself and to learn in depth how deep neural networks work.

The core of this project, the Multilayer Perceptron (MLP) part, was built in a week.

I then decided to push it even further by adding Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN), Autoencoders, Variational Autoencoders (VAE), GANs and Transformers.

Regarding Transformers, I basically reimplemented the "Attention Is All You Need" paper. It works in theory, but it needs a huge amount of data, more than can practically be trained on a CPU. You can, however, see what each layer produces and how the attention weights are calculated here.
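For reference, the attention weights in that paper come from scaled dot-product attention, softmax(QKᵀ / √d_k)·V. Below is a minimal NumPy sketch of that formula on its own, not the library's attention layer; the function names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max before exponentiating for numerical stability
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v, mask=None):
    """q: (n, d_k) queries, k: (m, d_k) keys, v: (m, d_v) values -> (output, weights)."""
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)          # (n, m) similarity of each query to each key
    if mask is not None:
        # Positions where mask is False get a large negative score, so ~0 weight after softmax
        scores = np.where(mask, scores, -1e9)
    weights = softmax(scores, axis=-1)       # each row is a distribution over the keys
    return weights @ v, weights

rng = np.random.default_rng(0)
q = rng.normal(size=(3, 4))
k = rng.normal(size=(5, 4))
v = rng.normal(size=(5, 2))
out, w = scaled_dot_product_attention(q, k, v)
print(out.shape, w.shape)  # (3, 2) (3, 5)
```

Each row of `w` sums to 1 and says how much each key contributes to that query's output.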

This project will be maintained as long as I have ideas to improve it, and as long as I have time to work on it.

📦 Features

- Many model architectures (sequential, functional, autoencoder, transformer, gan) 🏗

- Many layers (dense, dropout, conv1d/2d, pooling1d/2d, flatten, embedding, batchnormalization, textvectorization, lstm, gru, attention and more) 🧠

- Many activation functions (sigmoid, tanh, relu, leaky relu, softmax, linear, elu, selu) 📈

- Many loss functions (mean squared error, mean absolute error, categorical crossentropy, binary crossentropy, huber loss) 📉

- Many optimizers (sgd, momentum, rmsprop, adam) 📊

- Supports binary classification, multiclass classification, regression and text generation 📚

- Preprocessing tools (tokenizer, pca, ngram, standardscaler, pad_sequences, one_hot_encode and more) 🛠

- Machine learning tools (isolation forest, k-means, pca, t-sne) 🧮

- Callbacks and regularizers (early stopping, l1/l2 regularization) 📉

- Save and load models 📁

- Simple to use 📚

⚙️ Installation

You can install the library using pip:

💡 How to use

Basic usage

See this file for a simple example of how to use the library.

For a more advanced example, see this file for an example using a CNN.

You can also check this file for text classification using an RNN.

Advanced usage

See this file for an example of how to use a VAE to generate new images.

Also see this file for an example of how to use a GAN to generate new images.

And this file for an example of how to generate new dinosaur names.

More examples are available in this folder.

You are free to tweak the hyperparameters and the network architecture to see how they affect the results.

🚀 Quick training examples (more here)

Binary Classification

Preprocess x_train, y_train, x_test and y_test if necessary (you can use neuralnetlib.preprocess and neuralnetlib.utils), then create a model (here with 10 input features), compile it and train it.
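To show what such a model computes, here is a NumPy-only sketch of the simplest binary classifier: a single dense unit with a sigmoid activation, trained with binary crossentropy and full-batch gradient descent on synthetic data with 10 features. It does not use neuralnetlib's API; all names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def binary_crossentropy(y_true, y_pred, eps=1e-12):
    # Clip predictions so log() never receives exactly 0 or 1
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return -np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))

rng = np.random.default_rng(42)
n_samples, n_features = 200, 10
x = rng.normal(size=(n_samples, n_features))
true_w = rng.normal(size=n_features)
y = (x @ true_w > 0).astype(float)          # synthetic labels from a hidden linear rule

w = np.zeros(n_features)
b = 0.0
learning_rate = 0.1
for epoch in range(200):
    p = sigmoid(x @ w + b)                  # forward pass: one dense unit + sigmoid
    grad = (p - y) / n_samples              # d(mean BCE)/d(logits) for sigmoid outputs
    w -= learning_rate * (x.T @ grad)       # full-batch gradient descent step
    b -= learning_rate * grad.sum()

probs = sigmoid(x @ w + b)
loss = binary_crossentropy(y, probs)
accuracy = np.mean((probs > 0.5) == y)
```

The gradient simplifies to `p - y` because the sigmoid's derivative cancels against the crossentropy's, which is why these two are usually paired.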

Multiclass Classification

Preprocess x_train, y_train, x_test and y_test if necessary (you can use neuralnetlib.preprocess and neuralnetlib.utils), then create and compile a model, for example for MNIST images. The activation argument accepts either a string or an ActivationFunction object, and the same goes for loss_function and optimizer. Finally, train the model.
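Multiclass classification swaps the sigmoid for a softmax and uses categorical crossentropy on one-hot labels. A minimal NumPy sketch on three synthetic clusters; the helpers are hypothetical stand-ins, not the library's one_hot_encode or layers.

```python
import numpy as np

def one_hot(labels, num_classes):
    # Row i has a 1 in column labels[i] and 0 elsewhere
    return np.eye(num_classes)[labels]

def softmax(z):
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def categorical_crossentropy(y_true, y_pred, eps=1e-12):
    return -np.mean(np.sum(y_true * np.log(np.clip(y_pred, eps, 1.0)), axis=1))

rng = np.random.default_rng(0)
num_classes, n_features = 3, 2
centers = np.array([[0.0, 3.0], [3.0, -3.0], [-3.0, -3.0]])
labels = rng.integers(0, num_classes, size=300)
x = centers[labels] + rng.normal(size=(300, n_features))   # three Gaussian blobs
y = one_hot(labels, num_classes)

w = np.zeros((n_features, num_classes))
b = np.zeros(num_classes)
for epoch in range(300):
    p = softmax(x @ w + b)
    grad = (p - y) / len(x)        # gradient of mean CCE w.r.t. the logits
    w -= 0.1 * (x.T @ grad)
    b -= 0.1 * grad.sum(axis=0)

loss = categorical_crossentropy(y, softmax(x @ w + b))
pred = softmax(x @ w + b).argmax(axis=1)
accuracy = np.mean(pred == labels)
```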

Regression

Preprocess x_train, y_train, x_test and y_test if necessary (you can use neuralnetlib.preprocess and neuralnetlib.utils), then create and compile a model (names can be given either as acronyms or in full), and train it.
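The regression losses from the feature list fit in a few lines of NumPy. The sketch also shows one way an acronym and a full name could resolve to the same function; the `LOSSES` table and `get_loss` helper are hypothetical, not neuralnetlib's actual registry.

```python
import numpy as np

def mean_squared_error(y_true, y_pred):
    return np.mean((y_true - y_pred) ** 2)

def mean_absolute_error(y_true, y_pred):
    return np.mean(np.abs(y_true - y_pred))

def huber_loss(y_true, y_pred, delta=1.0):
    # Quadratic for small residuals, linear for large ones (less sensitive to outliers)
    r = np.abs(y_true - y_pred)
    return np.mean(np.where(r <= delta, 0.5 * r ** 2, delta * (r - 0.5 * delta)))

# Hypothetical lookup table: an acronym and the full name map to the same function
LOSSES = {
    "mse": mean_squared_error, "mean_squared_error": mean_squared_error,
    "mae": mean_absolute_error, "mean_absolute_error": mean_absolute_error,
    "huber": huber_loss, "huber_loss": huber_loss,
}

def get_loss(name):
    return LOSSES[name.lower()]

y_true = np.array([1.0, 2.0, 3.0])
y_pred = np.array([1.5, 2.0, 5.0])
print(get_loss("mse")(y_true, y_pred))   # (0.25 + 0 + 4) / 3
```

With the outlier residual of 2, MSE is dominated by that one point while the Huber loss grows only linearly past `delta`.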

Image Compression

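Load the images and normalize the pixel values to [0, 1] by dividing by 255, then build an autoencoder: the encoder compresses each image down to a bottleneck, and the decoder expands it back through outputs of 14x14x32, then 28x28x16, and finally 28x28x1, the size of the original image.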
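The spatial sizes in that decoder double from 14x14 to 28x28. A NumPy sketch of 2x2 max pooling (28 to 14) and nearest-neighbour upsampling (14 to 28), assuming these are the kinds of operations involved since the original layer choices are not shown; the channel counts (32, 16, 1) would come from the number of convolution filters, which this sketch does not model.

```python
import numpy as np

def max_pool_2x2(x):
    # x: (H, W, C) with even H and W -> (H/2, W/2, C), keeping the max of each 2x2 block
    h, w, c = x.shape
    return x.reshape(h // 2, 2, w // 2, 2, c).max(axis=(1, 3))

def upsample_2x2(x):
    # Nearest-neighbour upsampling: repeat each pixel along both spatial axes
    return x.repeat(2, axis=0).repeat(2, axis=1)

image = np.random.default_rng(0).random((28, 28, 1))   # one MNIST-sized image in [0, 1]
pooled = max_pool_2x2(image)
restored = upsample_2x2(pooled)
print(pooled.shape, restored.shape)  # (14, 14, 1) (28, 28, 1)
```

Upsampling restores the shape but not the lost detail; recovering that detail is what the decoder's trainable layers learn to do.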

Image Generation

Load the MNIST dataset, concatenate the train and test data, flatten the images, normalize the pixel values by dividing by 255, and convert the labels to categorical (one-hot) vectors. Then define and train the model.
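These preprocessing steps, plus the input that makes a GAN conditional, can be sketched in NumPy. Random arrays stand in for MNIST so the snippet runs offline, and `noise_dim` is a hypothetical value. Feeding the generator noise concatenated with a one-hot label is the standard cGAN approach, assumed here rather than taken from the original example.

```python
import numpy as np

rng = np.random.default_rng(0)
# Stand-ins for MNIST arrays (uint8 images, integer labels); load the real data in practice
x_train = rng.integers(0, 256, size=(60, 28, 28), dtype=np.uint8)
y_train = rng.integers(0, 10, size=60)
x_test = rng.integers(0, 256, size=(10, 28, 28), dtype=np.uint8)
y_test = rng.integers(0, 10, size=10)

# Concatenate train and test data, flatten images, normalize pixel values to [0, 1]
x = np.concatenate([x_train, x_test]).reshape(-1, 28 * 28).astype(np.float32) / 255.0
y = np.concatenate([y_train, y_test])

# Labels to categorical (one-hot)
num_classes = 10
y_onehot = np.eye(num_classes, dtype=np.float32)[y]

# Conditional generator input: random noise concatenated with the desired class label
noise_dim = 32   # hypothetical noise size
batch_labels = y_onehot[:16]
noise = rng.normal(size=(16, noise_dim)).astype(np.float32)
generator_input = np.concatenate([noise, batch_labels], axis=1)
print(x.shape, generator_input.shape)  # (70, 784) (16, 42)
```

Because the label is part of the input, choosing the one-hot vector at sampling time lets you pick which digit the generator should draw.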

Text Generation (example here is for translation)

Remove Unicode characters from the text, and configure both the source and target tokenizers to keep special characters; otherwise the tokenizer would remove them, including punctuation. Then build and train the translation model.
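A sketch of that preprocessing idea in plain Python and NumPy: fold accented characters to ASCII, keep punctuation as tokens, wrap each sentence in start and end tokens, and pad to a fixed length. The helpers and special-token names are hypothetical and are not neuralnetlib's Tokenizer or pad_sequences.

```python
import re
import unicodedata
import numpy as np

def to_ascii(text):
    # Decompose accented letters (e.g. "Ç" -> "C" + cedilla), then drop the non-ASCII marks
    return unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")

def tokenize(text):
    # Words and punctuation become separate tokens; punctuation is kept, not discarded
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(sentences, specials=("<pad>", "<sos>", "<eos>", "<unk>")):
    vocab = {tok: i for i, tok in enumerate(specials)}
    for s in sentences:
        for tok in tokenize(s):
            vocab.setdefault(tok, len(vocab))
    return vocab

def encode(sentence, vocab, max_len):
    ids = [vocab["<sos>"]] + [vocab.get(t, vocab["<unk>"]) for t in tokenize(sentence)] + [vocab["<eos>"]]
    ids = ids[:max_len]                                   # truncation may cut off <eos>
    return ids + [vocab["<pad>"]] * (max_len - len(ids))  # post-padding with <pad>

targets = [to_ascii("Bonjour, le monde !"), to_ascii("Ça va ?")]
vocab = build_vocab(targets)
batch = np.array([encode(s, vocab, max_len=8) for s in targets])
```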

You can also save and load models using the save and load methods.


📜 Some outputs and easy usages

Here is the decision boundary on a Binary Classification (breast cancer dataset):

PCA (Principal Component Analysis) was used to reduce the number of features to 2 so that the decision boundary could be plotted. Projecting n-dimensional data onto 2D loses information, so the plotted boundary may not always be accurate. I also tried t-SNE, but the results were not as good.
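A minimal NumPy sketch of that kind of 2D projection, using the singular value decomposition of the centered data; random data with 30 features (the size of scikit-learn's version of the breast cancer dataset) stands in for the real one, and `pca_2d` is a hypothetical helper, not the library's pca. The explained-variance ratio it returns shows how much of the data the 2D plot actually captures.

```python
import numpy as np

def pca_2d(x):
    # Center each feature, then project onto the top-2 right singular vectors
    x_centered = x - x.mean(axis=0)
    _, s, vt = np.linalg.svd(x_centered, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)          # fraction of total variance per component
    return x_centered @ vt[:2].T, explained[:2]

rng = np.random.default_rng(0)
x = rng.normal(size=(100, 30))
x_2d, ratio = pca_2d(x)
```

If `ratio.sum()` is small, most of the variance lives outside the plotted plane, which is why a boundary drawn in 2D can misrepresent the real one.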

Here is an example of a model trained on MNIST using the library:

Here is an example of a loaded model used with Tkinter:

Here, I replaced Keras/Tensorflow with this library for my Handigits project...

Here are some dinosaur names generated by a simple RNN trained on a list of existing dinosaur names.

Here are some MNIST generated images using a cGAN.

You can of course use the library for any dataset you want.

✏️ Edit the library

You can clone the repository and run:

Then test your changes on the examples.

🎯 TODO

- Add support for streaming dataset loading, to handle datasets larger than your RAM

- Visual updates (tabulation of model.summary() parameters calculation, colorized progress bar, etc.)

- Better save format (e.g. HDF5 via h5py)

- Add cuDNN support to allow the use of GPUs

🐞 Known issues

Nothing yet! Feel free to open an issue if you find one.

✍️ Authors

- Marc Pinet - Initial work - marcpinet